100 avatars in a browser tab: Optimizations in rendering for massive events and beyond

This post was originally published on Decentraland.

Introduction


The Metaverse Festival was envisioned as a milestone in the platform's history, and the user experience had to be up to par: improving avatar rendering performance was paramount.

When planning for the Metaverse Festival began, only 20 avatars could be spawned simultaneously around the player, and if they were all rendered on screen at the same time, performance degraded significantly. That hard cap was due to web browser rendering limitations and to the old communications protocol, which was later improved with the new Archipelago solution. This would not provide a realistic social festival experience for users, so Decentraland contributors concluded that they had to increase the number of users that could be rendered on screen for the festival to be successful.

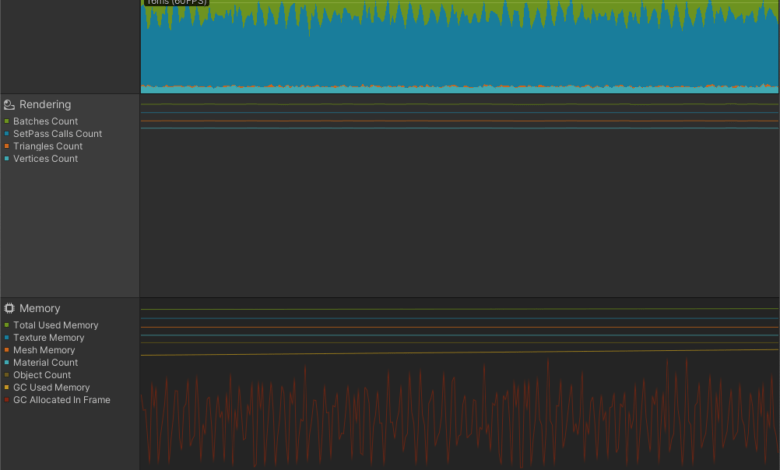

Explorer contributors began by putting a theory to the test: that rendering (including CPU skinning) was the main culprit behind the performance issues caused by having many avatars on screen. The theory was confirmed after profiling performance with 100 to 200 avatar bots in a controlled environment.

In light of these findings, the goal was to increase the maximum number of displayed avatars from 20 to 100. Three efforts were made toward that goal:

- A new impostor system was introduced. This system avoids rendering and animating distant avatars by replacing them with a single look-alike billboard when needed.

- A custom GPU skinning implementation was put in place, reducing the CPU-bound skinning bottleneck by a huge margin.

- The avatar rendering pipeline was reimplemented from scratch, reducing draw calls from around 10+ to a single draw call in the best-case scenario. Complex combinations of wearables could push the draw call count a bit higher due to render state changes, but it would not exceed three or four calls in the worst cases.

According to benchmark tests, these combined efforts improved avatar rendering performance by around 180%. This is the first of many efforts to prepare the platform for mass events like the Metaverse Festival, which more than 20,000 people attended.

Avatar Impostors

After implementing a tool for spawning avatar bots and another for profiling performance in the web browser, the contributors were ready to take that profiling data and create the avatar impostor system.

While advanced scenes were being crafted in the lead-up to the festival, a scene was built for testing purposes. It contained certain elements desired for the environment: a stadium-like structure, some constantly updated objects, and at least two different video streaming sources. All of this was done using the Decentraland SDK.

With everything else in place, work on the proof of concept for avatar impostors began.

Once the core logic was working, the contributors started on the “visual part” of the feature.

First, a sprite atlas of default impostors was used to assign a random impostor to every avatar.

Later on, several experiments were done using snapshots of each avatar captured at runtime from its angle relative to the camera. However, manipulating textures at runtime in the browser proved extremely heavy on performance, so that option was discarded.

In the end, each user's body snapshot, already present on the content servers, was used for their impostor, and the default sprite atlas was used for bots and users without a profile.
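The selection logic described above can be sketched as follows. This is a minimal illustration, not the explorer's code: the distance threshold, the atlas size, and all names (`Avatar`, `choose_impostor`, `IMPOSTOR_DISTANCE`, `ATLAS_CELLS`) are hypothetical. It picks the atlas cell from a hash of the user ID, so a bot gets a pseudo-random default impostor that stays the same from frame to frame.

```python
import zlib
from dataclasses import dataclass
from typing import Optional

# Hypothetical values: the post does not state the real threshold or atlas size.
IMPOSTOR_DISTANCE = 30.0  # beyond this distance an avatar is drawn as a billboard
ATLAS_CELLS = 16          # number of default impostors in the sprite atlas


@dataclass
class Avatar:
    user_id: str
    body_snapshot_url: Optional[str]  # None for bots and users without a profile
    distance: float                   # distance from the camera


@dataclass
class ImpostorChoice:
    use_impostor: bool
    texture_url: Optional[str] = None  # body snapshot from the content servers
    atlas_cell: Optional[int] = None   # index into the default sprite atlas


def choose_impostor(avatar: Avatar) -> ImpostorChoice:
    """Decide whether an avatar becomes an impostor and which image it uses."""
    if avatar.distance < IMPOSTOR_DISTANCE:
        # Close enough: keep rendering and animating the full avatar.
        return ImpostorChoice(use_impostor=False)
    if avatar.body_snapshot_url is not None:
        # Reuse the body snapshot that already exists for this profile.
        return ImpostorChoice(True, texture_url=avatar.body_snapshot_url)
    # No profile: pick a pseudo-random but stable cell from the default atlas.
    cell = zlib.crc32(avatar.user_id.encode("utf-8")) % ATLAS_CELLS
    return ImpostorChoice(True, atlas_cell=cell)
```

Hashing instead of calling a random generator each frame avoids impostors flickering between atlas cells.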

Last but not least, some final effects related to position and distance were implemented and tweaked.
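The post does not detail these effects. As an illustration only, two common impostor touches are turning the billboard toward the camera around the vertical axis and fading it in over a distance band so the swap is less noticeable. The sketch assumes a Unity-style, Y-up coordinate system with +Z as forward; `billboard_yaw`, `impostor_alpha`, and the 30 m / 5 m values are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def billboard_yaw(avatar_pos: Vec3, camera_pos: Vec3) -> float:
    """Yaw in radians about the vertical (Y) axis that points the billboard's
    forward (+Z) axis at the camera. Height differences are ignored so the
    impostor stays upright."""
    dx = camera_pos[0] - avatar_pos[0]
    dz = camera_pos[2] - avatar_pos[2]
    return math.atan2(dx, dz)


def impostor_alpha(distance: float, start: float = 30.0, band: float = 5.0) -> float:
    """Impostor opacity: 0 before `start`, 1 after `start + band`,
    and a linear ramp in between (hypothetical values)."""
    t = (distance - start) / band
    return min(1.0, max(0.0, t))
```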

Highly frequented scenes like Wondermine proved invaluable for testing with real users.

The impostor system contributions are public and available in the following PRs (1, 2, 3, 4).

GPU Skinning

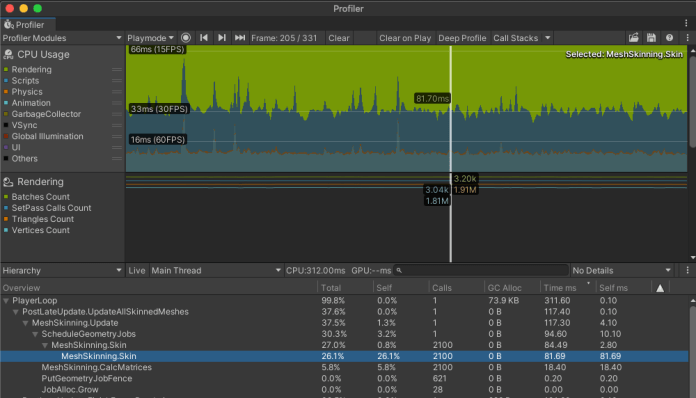

Unity's skinning implementation for the WebGL/WASM target forces the skinning computations onto the main thread and, moreover, lacks all the SIMD improvements present on other platforms. When rendering many avatars, this overhead piles up and becomes a performance issue, taking up to 15% of frame time (or more!).
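To see why this cost grows with avatar count, consider the work skinning does. The sketch below shows standard linear blend skinning in plain Python: each vertex is transformed by a few (commonly four) bone matrices and the results are blended by weight. It is a generic illustration, not Unity's code; on WebGL this loop runs on the main thread for every vertex of every visible avatar, every frame, whereas GPU skinning moves the same computation into the vertex shader. The names are hypothetical, and each skin matrix is assumed to be precomputed as the bone's world matrix multiplied by its bind pose.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Matrix4 = Sequence[Sequence[float]]  # 4x4, row-major


def transform_point(m: Matrix4, p: Vec3) -> Vec3:
    """Apply an affine 4x4 matrix to a point (implicit w = 1)."""
    x, y, z = p
    return (
        m[0][0] * x + m[0][1] * y + m[0][2] * z + m[0][3],
        m[1][0] * x + m[1][1] * y + m[1][2] * z + m[1][3],
        m[2][0] * x + m[2][1] * y + m[2][2] * z + m[2][3],
    )


def skin_vertex(position: Vec3, bone_indices: Sequence[int],
                bone_weights: Sequence[float], skin_matrices: Sequence[Matrix4]) -> Vec3:
    """Linear blend skinning: weighted sum of the vertex transformed by each bone.
    skin_matrices[i] is assumed to be boneWorld[i] * bindPose[i], precomputed."""
    sx = sy = sz = 0.0
    for index, weight in zip(bone_indices, bone_weights):
        if weight == 0.0:
            continue  # unused influence slot
        px, py, pz = transform_point(skin_matrices[index], position)
        sx += weight * px
        sy += weight * py
        sz += weight * pz
    return (sx, sy, sz)


def skin_mesh(positions: Sequence[Vec3], indices: Sequence[Sequence[int]],
              weights: Sequence[Sequence[float]], skin_matrices: Sequence[Matrix4]) -> List[Vec3]:
    """CPU skinning of a whole mesh: one skin_vertex call per vertex, every frame."""
    return [skin_vertex(p, i, w, skin_matrices) for p, i, w in zip(positions, indices, weights)]
```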

In most GPU skinning implementations described online, the animation data is packed into textures and then fed into the skinning shader. This is good for performance on paper, but it has its own limitations. For instance, blending between animations is complicated, not to mention having to write your own custom animator to handle the animation state.
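For comparison, the texture-based approach looks roughly like this. In one common layout (assumed here, not taken from the explorer), every row of a float RGBA texture holds one baked animation frame, and each bone occupies three texels that store the top three rows of its matrix; the shader rebuilds the matrix from those texels. Playing one clip is a simple lookup, but blending two clips means fetching and interpolating two sets of texels and tracking clip time on the CPU yourself, which is the custom-animator work the paragraph refers to. All names are hypothetical.

```python
from typing import List, Sequence, Tuple

Texel = Tuple[float, ...]  # one RGBA float texel (4 components)


def bake_animation(frames: Sequence[Sequence[Sequence[Sequence[float]]]]) -> List[List[Texel]]:
    """Pack baked bone matrices into rows of RGBA texels.

    frames[f][b] is the 4x4 row-major matrix of bone b at frame f.
    Row f of the result is one texture row; bone b uses texels 3*b .. 3*b+2.
    """
    texture = []
    for bones in frames:
        row: List[Texel] = []
        for matrix in bones:
            for r in range(3):  # the 4th row is always (0, 0, 0, 1), so it is not stored
                row.append(tuple(matrix[r]))
        texture.append(row)
    return texture


def fetch_bone_matrix(texture: List[List[Texel]], frame: int, bone: int) -> List[List[float]]:
    """What the skinning shader would do: read three texels and rebuild the matrix."""
    row = texture[frame]
    rows = [list(row[3 * bone + r]) for r in range(3)]
    return rows + [[0.0, 0.0, 0.0, 1.0]]
```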

Because skinning is so poorly optimized in the WASM target, contributors found that a performance improvement of roughly 200% could be observed even without packing the animation data into textures. This means that a simple implementation that just uploads the bone matrices to a skinning shader every frame is enough. This optimization was pushed even further by throttling the farthest animations, so they don't upload their bone matrices every frame. All in all, this approach gave avatars a performance boost, avoided rewriting the Unity animation system, and kept support for animation state blending.
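The throttling can be sketched as a per-avatar check made before uploading bone matrices. The distance bands and intervals below are hypothetical (the post does not give them), and offsetting each avatar's phase by its ID is an added assumption so that throttled avatars do not all update on the same frame.

```python
# Hypothetical distance bands (metres) -> upload bone matrices every N frames.
# The post does not publish the explorer's actual values.
THROTTLE_BANDS = [(15.0, 1), (30.0, 2), (float("inf"), 4)]


def upload_interval(distance: float) -> int:
    """Number of frames between bone matrix uploads for an avatar at this distance."""
    for max_distance, interval in THROTTLE_BANDS:
        if distance < max_distance:
            return interval
    return THROTTLE_BANDS[-1][1]  # fallback, e.g. for an infinite distance


def should_upload(avatar_id: int, distance: float, frame: int) -> bool:
    """True when this avatar's bone matrices should be sent to the skinning shader.

    Adding the avatar id spreads throttled avatars across different frames
    instead of updating them all at once.
    """
    return (frame + avatar_id) % upload_interval(distance) == 0
```

In this sketch, frames that skip an upload simply reuse the last matrices sent, so a distant avatar's animation steps at a lower rate while its bone data costs proportionally less.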

The throttled GPU skinning can be seen in action on the farthest avatars in these videos from the Metaverse Festival:

The GPU skinning contributions are public and available in the following PRs (1, 2, 3, 4).

Avatars Rendering Overhaul

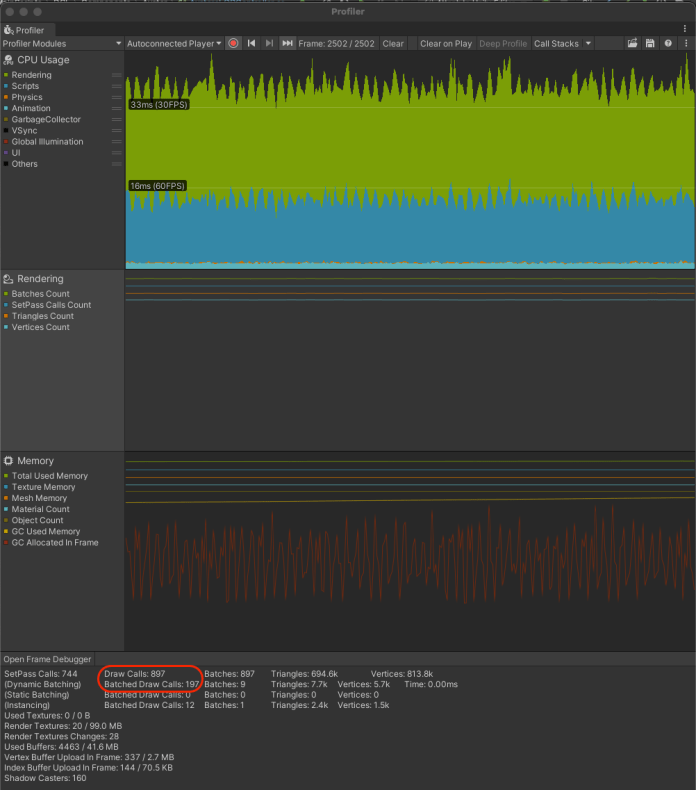

Avatars were rendered as a series of separate skinned mesh renderers that shared their bone data. Additionally, some wearables needed a separate material to account for skin color and emission, which meant that some wearables needed two or three draw calls. In this scenario, drawing a complete avatar could involve 10+ draw calls. Draw calls are very expensive in WebGL, easily comparable to mobile GPU draw call costs, or perhaps even worse. When benchmarking with more than 20 avatars on screen, the frame rate started dropping considerably.


The new avatar rendering pipeline works by merging all the wearable primitives into a single mesh and encoding sampler and uniform data in the vertex stream, in a way that also allows all of the wearable materials to be packed into a single one.
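A minimal sketch of the merge step, assuming each wearable primitive carries its own vertices, triangle indices, texture slot indices, and base color. The post only says that sampler and uniform data go into the vertex stream; here the slot indices are written to an extra per-vertex attribute (standing in for a UV channel) and the base color to per-vertex colors. Bone weights and normals are omitted for brevity, and all names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Color = Tuple[float, float, float, float]


@dataclass
class Primitive:
    positions: List[Vec3]
    triangles: List[int]   # indices into this primitive's positions
    albedo_slot: int       # sampler slot of the albedo texture (hypothetical)
    emission_slot: int     # sampler slot of the emission texture, -1 if none
    base_color: Color      # a per-material uniform in the old pipeline


@dataclass
class CombinedMesh:
    positions: List[Vec3] = field(default_factory=list)
    triangles: List[int] = field(default_factory=list)
    texture_ids: List[Tuple[int, int]] = field(default_factory=list)  # per-vertex (albedo, emission)
    colors: List[Color] = field(default_factory=list)                 # per-vertex base color


def combine(primitives: List[Primitive]) -> CombinedMesh:
    """Merge all primitives into one mesh so the avatar can be drawn in one call."""
    out = CombinedMesh()
    for prim in primitives:
        base = len(out.positions)  # offset this primitive's indices past earlier vertices
        out.positions.extend(prim.positions)
        out.triangles.extend(base + i for i in prim.triangles)
        n = len(prim.positions)
        # Material data moves into the vertex stream, repeated for every vertex.
        out.texture_ids.extend([(prim.albedo_slot, prim.emission_slot)] * n)
        out.colors.extend([prim.base_color] * n)
    return out
```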


You may be wondering how the wearable textures are packed. As wearables are dynamic by nature, they don't benefit from atlasing, and texture sharing between them is poor, almost non-existent. The most obvious optimizations are:

- Packing all textures into a runtime-generated atlas.

- Using a 2D texture array and copying all the wearable textures into it at runtime.

The issue with these approaches is that generating and copying texture pixels at runtime is very expensive due to the CPU-GPU memory bottleneck. The texture array approach has another limitation: its elements can't simply reference other textures; they must be copied, and all the textures in the array must be the same size.

In addition, creating a new texture for each avatar is memory intensive, and the current client heap is limited to 2 GB due to emscripten limitations. It can be extended to 4 GB, but only from Unity 2021 onwards, since emscripten was updated in that version. As the contributors' goal was to support at least 100 avatars at the same time, this was not an option.
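A back-of-the-envelope calculation shows why. Assuming, hypothetically, that each avatar got one uncompressed 2048×2048 RGBA32 atlas with a full mip chain, and that a copy of it had to live in the heap, 100 avatars would already exceed the 2 GB limit before counting emission textures or anything else. The atlas size and `texture_bytes` helper are illustrative assumptions.

```python
def texture_bytes(width: int, height: int, bytes_per_pixel: int = 4, mipmaps: bool = True) -> int:
    """Memory used by one uncompressed texture, optionally with a full mip chain."""
    total = 0
    w, h = width, height
    while True:
        total += w * h * bytes_per_pixel
        if not mipmaps or (w == 1 and h == 1):
            break
        w, h = max(1, w // 2), max(1, h // 2)  # next mip level
    return total


HEAP_LIMIT = 2 * 1024**3                 # the 2 GB emscripten heap limit mentioned above
per_avatar = texture_bytes(2048, 2048)   # hypothetical: one RGBA32 atlas per avatar
total = 100 * per_avatar                 # the 100-avatar target

print(f"{total / 1024**3:.2f} GiB")      # prints 2.08 GiB
print(total > HEAP_LIMIT)                # prints True
```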

To avoid the texture copy issues altogether, a simpler but more efficient approach was taken.

In the avatar shader, a texture sampler pool uses the 12 available sampler slots for the avatar textures. When rendering, the needed sampler is indexed using custom UV data. This data is laid out so that albedo and emission textures are identified by different UV channels. This approach allows very efficient packing: for instance, the same material could use 6 albedo and 6 emission textures, or 11 albedo and a single emission texture, and so on. The avatar combiner takes advantage of this and tries to pack all the wearables in use as efficiently as possible. The tradeoff is that the shader code has to branch, but fragment performance is not an issue, so the return on investment is exceedingly positive.
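The packing idea can be sketched as a greedy pass over the wearables. This is an assumption-laden illustration, not the avatar combiner itself: it walks wearables in order, gives each new texture the next free slot out of 12 (albedo and emission share the pool, which is what allows both the 6 + 6 and the 11 + 1 splits), lets wearables that share a texture share its slot, and opens a new material, meaning another draw call, when the next wearable's textures no longer fit. Names are hypothetical. On the shader side, GLSL ES 3.00 (WebGL 2) only allows sampler arrays to be indexed with constant expressions, which is consistent with the shader having to branch on the slot index.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

MAX_SAMPLERS = 12  # sampler slots available to the avatar shader (from the post)


@dataclass
class Wearable:
    name: str
    albedo: Optional[str] = None    # texture id, or None if unused
    emission: Optional[str] = None


@dataclass
class MaterialBatch:
    slots: Dict[str, int] = field(default_factory=dict)  # texture id -> sampler slot
    wearables: List[str] = field(default_factory=list)


def pack_wearables(wearables: List[Wearable]) -> List[MaterialBatch]:
    """Greedily assign wearable textures to a shared pool of sampler slots.

    Each batch is one material (one draw call); a new batch starts when a
    wearable's textures no longer fit in the remaining slots.
    """
    batches = [MaterialBatch()]
    for wearable in wearables:
        # Unique textures this wearable needs, in order (albedo first).
        candidates = (wearable.albedo, wearable.emission)
        needed = list(dict.fromkeys(t for t in candidates if t is not None))
        current = batches[-1]
        missing = [t for t in needed if t not in current.slots]
        if len(current.slots) + len(missing) > MAX_SAMPLERS:
            current = MaterialBatch()
            batches.append(current)
            missing = needed
        for texture in missing:
            current.slots[texture] = len(current.slots)  # next free slot
        current.wearables.append(wearable.name)
    return batches
```

The slot numbers assigned here are what the per-vertex UV data would carry, so the shader knows which sampler to read for each wearable.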

![]()

![]()

Top image: before the improvement (120 ms / 8 FPS). Bottom image: after (50 ms / 20 FPS).

The avatar rendering contributions are public and available in the following PRs (1, 2).

Conclusion

After these improvements, a performance increase of 180% (from an average of 10 FPS to an average of 28 FPS) was observed with 100 avatars on screen.
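The headline figures follow from simple ratios: the relative frame rate gain from the averages above, the frame times from the before/after screenshots converted to frame rates, and the increase in the avatar cap.

$$
\frac{28 - 10}{10} = 1.8 = 180\%, \qquad
\frac{1000\ \text{ms}}{120\ \text{ms}} \approx 8.3\ \text{FPS}, \qquad
\frac{1000\ \text{ms}}{50\ \text{ms}} = 20\ \text{FPS}, \qquad
\frac{100}{20} = 5\times
$$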

Some of these improvements, like the throttled GPU skinning, may be applied to other animated meshes within Decentraland in the future.

These major efforts to allow 5x more avatars on screen (from 20 to 100) for the Festival are now a permanent part of the Decentraland explorer, and they will continue to add value to in-world social experiences.
